Technical SEO Audit

Technical SEO and on-page SEO are interlinked, so where one ends and the other begins varies from source to source. In this tutorial, we cover the following three areas of technical SEO:

- Indexability

- Page experience issues (primarily speed, mobile-friendliness, and security)

- Schema markup (structured data, rich results)

Indexability

Indexing is often the first challenge for new websites. You can check the number of indexed pages on your website using Google Search Console.

You can also use Google Search to check which pages on a domain are indexed by entering site:DOMAIN (e.g., site:example.com) in the search box. Because Google Search Console data can lag behind, this is a quick way to spot-check the current indexing status, although site: results are not guaranteed to be a complete list of indexed pages.

Checking indexability requires more than knowing how many pages are indexed. Pages must be crawlable, and their content must be useful enough to users that search engines choose to index them.

Several factors speed up or slow down page indexing. In this section, we explain the main factors at a high level; Chapter 11 covers implementation in detail.

Sitemap.xml

Sitemap.xml is a sitemap for crawlers. It should list all the page URLs that you want search engines to index.

If your website has only a few pages (e.g., fewer than 100), you may not need sitemap.xml. If it has many pages, however, sitemap.xml helps crawlers discover them and improves your website's crawlability.

Place the file in the website's root directory. To let search engines know the sitemap exists, submit its URL in Google Search Console. You can also reference it with a Sitemap: line in robots.txt, as the robots.txt example below shows.

Sitemap.xml Example

```xml
<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2024-02-20</lastmod>
    <changefreq>daily</changefreq>
    <priority>1.0</priority>
  </url>
  <url>
    <loc>https://example.com/about</loc>
    <lastmod>2024-01-15</lastmod>
    <changefreq>monthly</changefreq>
    <priority>0.8</priority>
  </url>
  <!-- ... -->
</urlset>
```
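If you generate sitemap.xml from your own list of pages, a small script can keep it in sync with the site. The following is a minimal sketch using Python's standard xml.etree.ElementTree; the page list is hypothetical, and the sketch omits <changefreq> and <priority> because Google has said it ignores those two fields. A single sitemap file is limited to 50,000 URLs (50 MB uncompressed), so larger sites split their URLs across several sitemaps listed in a sitemap index file.

```python
import xml.etree.ElementTree as ET
from datetime import date
from typing import Iterable, Tuple

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: Iterable[Tuple[str, date]]) -> str:
    """Return sitemap.xml text for (url, last-modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        # ElementTree escapes characters such as "&", as the protocol requires.
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod.isoformat()  # YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical page list; in practice it would come from your CMS or router.
    pages = [
        ("https://example.com/", date(2024, 2, 20)),
        ("https://example.com/about", date(2024, 1, 15)),
    ]
    print(build_sitemap(pages))
```

Writing the declaration by hand keeps the encoding label fixed at UTF-8 regardless of the machine's locale.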

Robots.txt

Robots.txt is a text file that tells robots (crawlers) which pages they may or may not crawl. The file must be located at the root of the website. Unlike the meta robots tag, which applies to a single page, robots.txt rules apply to the whole site. Because a mistake in robots.txt can block crawlers from important pages, check that the rules are written correctly. Note that robots.txt controls crawling, not indexing: a blocked URL can still be indexed (without its content) if other pages link to it.

Robots.txt Example

```text
# robots.txt for http://www.apple.com/
User-agent: *
Disallow: /*/includes/*
Disallow: /*retail/availability*
Disallow: /*retail/availabilitySearch*
# ...
Sitemap: https://www.apple.com/shop/sitemap.xml
Sitemap: https://www.apple.com/autopush/sitemap/sitemap-index.xml
Sitemap: https://www.apple.com/newsroom/sitemap.xml
Sitemap: https://www.apple.com/retail/sitemap/sitemap.xml
Sitemap: https://www.apple.com/today/sitemap.xml
```
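To check how a robots.txt file treats specific URLs, you can use Python's standard urllib.robotparser module. The sketch below uses a hypothetical robots.txt rather than the Apple file above, because urllib.robotparser matches rules by simple path prefix and does not implement Google's * and $ wildcards. It also applies the first matching Allow/Disallow line, whereas Google uses the most specific (longest) match, so treat its answers as approximate for complex files.

```python
from typing import Dict, Iterable
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /cart

Sitemap: https://example.com/sitemap.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def crawl_report(parser: RobotFileParser, urls: Iterable[str],
                 user_agent: str = "Googlebot") -> Dict[str, bool]:
    """Map each URL to True if user_agent may crawl it, else False."""
    return {url: parser.can_fetch(user_agent, url) for url in urls}


if __name__ == "__main__":
    parser = build_parser(ROBOTS_TXT)
    report = crawl_report(parser, [
        "https://example.com/",
        "https://example.com/private/report.html",
        "https://example.com/cart?item=42",
    ])
    for url, allowed in report.items():
        print("allowed" if allowed else "BLOCKED", url)
    print("Sitemaps:", parser.site_maps())
```

To audit a live site instead, call parser.set_url("https://example.com/robots.txt") and parser.read() in place of parse().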

Noindex and nofollow (meta robots tag)

If you don't want a specific page to be indexed, you can add an instruction to the page using the meta robots tag. The noindex directive is often used together with the nofollow directive. The noindex directive tells search engines not to include the page in their search results. The nofollow directive tells search engines not to follow the links on the page or pass any page value (link juice) through them to other pages.

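Because a misplaced noindex directive removes pages from search results, check that it is applied only to pages you intend to exclude. Also note that noindex does not block crawling; in fact, crawlers must be able to crawl the page to see the directive, so do not block a noindexed page in robots.txt.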

Noindex and Nofollow Example

```html
<meta name="robots" content="noindex, nofollow">
```
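One audit check is to find pages that carry noindex yet are listed in sitemap.xml, which sends search engines conflicting signals. The following is a minimal sketch using Python's standard html.parser; the page HTML is assumed to be already downloaded, and the helper names are hypothetical. It reads only meta tags named robots or googlebot, not directives sent in the X-Robots-Tag HTTP header.

```python
from html.parser import HTMLParser
from typing import Dict, Iterable, List, Set


class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: Set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").strip().lower() in ("robots", "googlebot"):
            for directive in attr.get("content", "").split(","):
                directive = directive.strip().lower()
                if directive:
                    self.directives.add(directive)


def robots_directives(html: str) -> Set[str]:
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def noindexed_sitemap_urls(pages: Dict[str, str],
                           sitemap_urls: Iterable[str]) -> List[str]:
    """Return sitemap URLs whose HTML carries noindex (a conflicting signal)."""
    return [url for url in sitemap_urls
            if url in pages and "noindex" in robots_directives(pages[url])]
```

Any URL this returns should either drop the noindex directive or be removed from sitemap.xml.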

Duplicate pages and canonical tag

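When the same content is available at more than one URL, search engines have to pick one version to index, and ranking signals can be split across the duplicates. This confuses search engines and can hurt rankings, although Google generally does not penalize duplicate content unless it appears intended to manipulate search results.

To tell search engines which version of a page is preferred, use the canonical tag. It consolidates signals on the URL you choose.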

It is good practice to add a canonical tag to every indexed page, including a self-referencing canonical on pages that are already the preferred version. Also make sure the canonical URLs match the URLs listed in sitemap.xml.

Search engines treat HTTP and HTTPS URLs, and www and non-www URLs, as different URLs; for example, http://www.example.com/ and https://example.com/ can be seen as duplicates of each other. For this reason, add the canonical tag even to the homepage.

The following is an example of a canonical tag.

Canonical tag example

```html
<link rel="canonical" href="https://example.com/example-page">
```
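To audit canonical tags at scale, you can extract each page's canonical URL and compare it with the URLs in sitemap.xml. The following is a minimal sketch using Python's standard html.parser; the function names and the sitemap set are hypothetical. The comparison is a strict string match by design, mirroring how search engines treat http/https and www/non-www variants as different URLs, so it may also flag harmless differences such as a trailing slash on the root URL.

```python
from html.parser import HTMLParser
from typing import List, Set


class CanonicalParser(HTMLParser):
    """Collect href values of <link rel="canonical"> tags."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: List[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        href = (attr.get("href") or "").strip()
        if "canonical" in rel_values and href:
            self.canonicals.append(href)


def canonical_issues(html: str, sitemap_urls: Set[str]) -> List[str]:
    """List canonical-tag problems for one page (strict string comparison)."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    unique = set(parser.canonicals)
    if not unique:
        return ["missing canonical tag"]
    if len(unique) > 1:
        return ["conflicting canonical tags: " + ", ".join(sorted(unique))]
    canonical = parser.canonicals[0]
    if canonical not in sitemap_urls:
        return [f"canonical URL not in sitemap.xml: {canonical}"]
    return []
```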

404 error and redirection

A 404 error occurs when the requested page cannot be found. It often appears after a web page's URL is changed.

If links to the page or the URLs in sitemap.xml still point to the old URL, crawlers will encounter the 404 error.

If you change the URL of a web page, set up a redirect (typically a permanent 301 redirect) that sends users and crawlers from the originally requested URL to the new one. Also update internal links and sitemap.xml to use the new URL.
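Redirects and 404s are easiest to audit by requesting each URL without following redirects and inspecting the status code. The following is a minimal sketch using only Python's standard library; check_url and describe are illustrative helpers, and the local demo server, which maps /old-page to /new-page, is hypothetical and exists only so the example runs without network access. Some servers answer HEAD requests incorrectly, so you may need to fall back to GET.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Optional, Tuple
from urllib.parse import urlsplit


def check_url(url: str, timeout: float = 10.0) -> Tuple[int, Optional[str]]:
    """Send a HEAD request without following redirects; return (status, Location)."""
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    conn = conn_cls(parts.netloc, timeout=timeout)
    try:
        path = parts.path or "/"
        if parts.query:
            path += "?" + parts.query
        conn.request("HEAD", path)
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def describe(url: str) -> str:
    status, location = check_url(url)
    if status == 404:
        return f"{url}: 404 Not Found (fix links or add a redirect)"
    if status in (301, 308):
        return f"{url}: permanent redirect ({status}) to {location}"
    if status in (302, 303, 307):
        return f"{url}: temporary redirect ({status}) to {location}"
    return f"{url}: {status}"


# Hypothetical local demo server, for illustration only.
OLD_TO_NEW = {"/old-page": "/new-page"}


class DemoHandler(BaseHTTPRequestHandler):
    def do_HEAD(self):
        if self.path in OLD_TO_NEW:
            self.send_response(301)
            self.send_header("Location", OLD_TO_NEW[self.path])
        elif self.path == "/new-page":
            self.send_response(200)
        else:
            self.send_response(404)
        self.end_headers()

    def log_message(self, format, *args):  # keep the demo output quiet
        pass


def start_demo_server() -> Tuple[HTTPServer, str]:
    server = HTTPServer(("127.0.0.1", 0), DemoHandler)  # port 0 = any free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server, f"http://127.0.0.1:{server.server_address[1]}"


if __name__ == "__main__":
    server, base = start_demo_server()
    for path in ("/old-page", "/new-page", "/missing"):
        print(describe(base + path))
    server.shutdown()
    server.server_close()
```

Running the same checks over every URL in sitemap.xml and every internal link target surfaces both 404s and temporary redirects that should be permanent.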

Site structure and internal links

Because crawlers discover web pages by following hyperlinks, a well-structured website with proper internal links helps them crawl the site efficiently.

If you have addressed the points above but your pages are still not indexed, the website may have site structure or internal linking issues. Be especially careful if your website is built with JavaScript.

As explained in the on-page SEO assessment section, if links are not built with <a> elements that have an href attribute, crawlers may not recognize them as links. You can easily check link status using Chrome extensions such as SEOquake and SEO META in 1CLICK.
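The same check can be scripted. The following is a minimal sketch using Python's standard html.parser that separates crawlable <a href> links from elements that look clickable (an <a> without a usable href, or any element with an onclick handler) but that crawlers may not follow; the class and function names are hypothetical. It inspects only the HTML you pass in, so for a JavaScript-built page you would feed it the rendered DOM (for example, copied from Chrome DevTools).

```python
from html.parser import HTMLParser
from typing import List


class LinkAuditParser(HTMLParser):
    """Separate crawlable <a href> links from click targets crawlers may miss."""

    def __init__(self) -> None:
        super().__init__()
        self.crawlable: List[str] = []
        self.suspect: List[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        href = (attr.get("href") or "").strip()
        if tag == "a" and href and not href.lower().startswith("javascript:"):
            self.crawlable.append(href)
        elif tag == "a" or "onclick" in attr:
            # <a> without a usable href, or a non-link element with a click handler
            self.suspect.append(f"<{tag}>")


def audit_links(html: str) -> LinkAuditParser:
    parser = LinkAuditParser()
    parser.feed(html)
    parser.close()
    return parser
```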

To learn more about managing indexing, see Chapter 11. Indexing Your Website.

Page performance

Google considers page experience when ranking results, so improving website performance is a critical part of SEO.

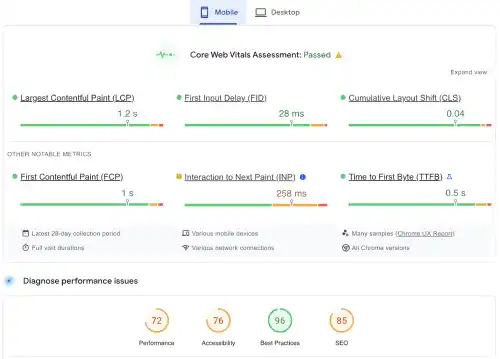

Core Web Vitals

Google introduced Core Web Vitals as a set of specific metrics for measuring a web page's real-world user experience in terms of loading performance, interactivity, and visual stability. Core Web Vitals include:

- Largest Contentful Paint (LCP): Measures loading performance. To provide a good user experience, LCP should occur within 2.5 seconds of when the page first starts to load.

- Interaction to Next Paint (INP): Measures interactivity, that is, how responsive a web page is, by quantifying the delay between user interactions (such as clicks, taps, or key presses) and the page's next visual update. To provide a good user experience, pages should have an INP of 200 milliseconds or less. INP replaced First Input Delay (FID) as a Core Web Vital on March 12, 2024.

- Cumulative Layout Shift (CLS): Measures visual stability. To provide a good user experience, pages should maintain a CLS of 0.1 or less. CLS quantifies how much unexpected layout shift users experience; a low CLS means the page stays stable as it loads and while users interact with it.
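Google rates each metric as good, needs improvement, or poor. Besides the "good" limits above, Google's published thresholds treat LCP above 4 seconds, INP above 500 milliseconds, and CLS above 0.25 as poor, and it assesses a page at the 75th percentile of page loads. The following is a minimal sketch of that rating logic in Python; the sample measurements are hypothetical, and the nearest-rank percentile is a simplification that may not match how the Chrome UX Report aggregates data.

```python
import math
from typing import Dict, Sequence, Tuple

# (upper bound for "good", upper bound for "needs improvement") per metric.
# LCP in seconds, INP in milliseconds, CLS is unitless.
THRESHOLDS: Dict[str, Tuple[float, float]] = {
    "LCP": (2.5, 4.0),
    "INP": (200.0, 500.0),
    "CLS": (0.1, 0.25),
}


def p75(samples: Sequence[float]) -> float:
    """75th percentile using the nearest-rank method (a simplification)."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))
    return ordered[rank - 1]


def rate(metric: str, value: float) -> str:
    good_max, needs_improvement_max = THRESHOLDS[metric]
    if value <= good_max:
        return "good"
    if value <= needs_improvement_max:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical LCP measurements (seconds) from several page loads.
    lcp_samples = [1.2, 1.8, 2.4, 3.9]
    print("LCP p75:", p75(lcp_samples), "->", rate("LCP", p75(lcp_samples)))
    print("INP 350 ms ->", rate("INP", 350))
    print("CLS 0.3 ->", rate("CLS", 0.3))
```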

We'll explain these metrics in Chapter 13. Optimizing Website Performance.

The Core Web Vitals report in Google Search Console shows how many of a website's URLs are rated Poor, Need improvement, or Good. For page-level diagnostics, use Lighthouse or PageSpeed Insights.

Lighthouse and PageSpeed Insights

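Both Lighthouse and PageSpeed Insights assess page performance. Their coverage and user interfaces differ, but they serve the same purpose: Lighthouse runs a lab test of the page, while PageSpeed Insights combines a Lighthouse lab test with real-user field data from the Chrome UX Report when enough data is available.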

Lighthouse is available as a Chrome extension, in Chrome DevTools, and as a command-line tool, while PageSpeed Insights is a web tool that also offers an API.
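For auditing many URLs, the PageSpeed Insights API (v5) can be called from a script. The following is a minimal sketch using Python's standard library; the response field names (lighthouseResult.categories.performance.score and the loadingExperience.metrics keys) reflect our understanding of the v5 response format and should be checked against the API reference. As we understand it, the CLS percentile in field data is reported multiplied by 100. An API key is optional for occasional use but recommended for regular audits.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict, Optional

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"
FIELD_METRICS = (
    "LARGEST_CONTENTFUL_PAINT_MS",
    "INTERACTION_TO_NEXT_PAINT",
    "CUMULATIVE_LAYOUT_SHIFT_SCORE",
)


def build_psi_url(page_url: str, strategy: str = "mobile",
                  api_key: Optional[str] = None) -> str:
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_psi(page_url: str, strategy: str = "mobile",
              api_key: Optional[str] = None,
              timeout: float = 120.0) -> Dict[str, Any]:
    """Call the API and return the parsed JSON report (requires network access)."""
    with urllib.request.urlopen(build_psi_url(page_url, strategy, api_key),
                                timeout=timeout) as response:
        return json.load(response)


def summarize(report: Dict[str, Any]) -> Dict[str, Any]:
    """Pull the lab performance score and field metric percentiles, if present."""
    summary: Dict[str, Any] = {}
    score = (report.get("lighthouseResult", {}).get("categories", {})
             .get("performance", {}).get("score"))
    if score is not None:
        summary["lab_performance_score"] = round(score * 100)
    field = report.get("loadingExperience", {}).get("metrics", {})
    for name in FIELD_METRICS:
        if name in field:
            summary[name] = (field[name].get("percentile"),
                             field[name].get("category"))
    return summary
```

A typical call is summarize(fetch_psi("https://example.com/")). Field metrics are missing from the summary when the page has too little real-user traffic in the Chrome UX Report.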

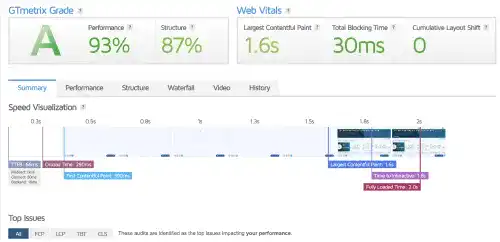

GTmetrix

If you need more detailed insights, GTmetrix is a good tool. You can use it for free after signing up, subject to a usage quota.

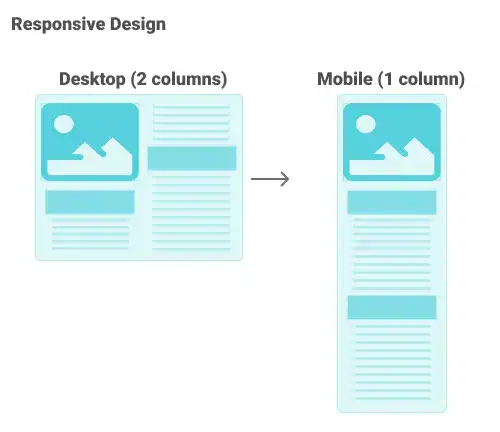

Mobile-friendliness

Google uses mobile-first indexing: it predominantly uses the mobile version of a site's content for indexing and ranking. For details, see Google Search Central's "Mobile site and mobile-first indexing best practices."

From an audit point of view, the key checkpoints are whether the page uses responsive design and whether its content is clearly visible and usable on small screens. Also confirm that the mobile version shows the same content as the desktop version.
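A quick, scriptable signal of responsive design is a viewport meta tag such as <meta name="viewport" content="width=device-width, initial-scale=1">. The following is a minimal sketch using Python's standard html.parser that flags pages where the tag is missing or does not set width=device-width; the function names are hypothetical. Passing this check does not prove the layout works on small screens, so it complements, rather than replaces, a visual check.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional


class ViewportParser(HTMLParser):
    """Record the content attribute of the first <meta name="viewport"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.viewport: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if (tag == "meta" and self.viewport is None
                and (attr.get("name") or "").lower() == "viewport"):
            self.viewport = attr.get("content") or ""


def viewport_issues(html: str) -> List[str]:
    parser = ViewportParser()
    parser.feed(html)
    parser.close()
    if parser.viewport is None:
        return ["missing viewport meta tag"]
    settings: Dict[str, str] = {}
    for part in parser.viewport.split(","):
        key, _, value = part.partition("=")
        settings[key.strip().lower()] = value.strip().lower()
    if settings.get("width") != "device-width":
        return ["viewport width is not set to device-width"]
    return []
```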

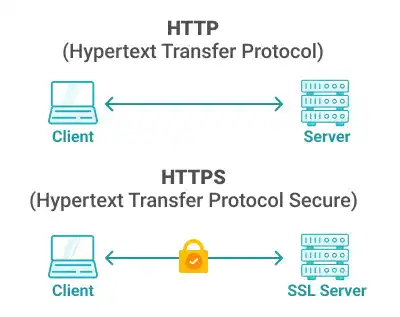

HTTPS

For security, serve your website over HTTPS from launch. Browsers mark HTTP pages as "Not secure," and Google uses HTTPS as a ranking signal.

You can check your website's HTTPS status in Google Search Console.

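To use HTTPS, you need to install an SSL/TLS certificate on your server. (SSL, Secure Sockets Layer, has been superseded by TLS, Transport Layer Security, but "SSL" is still widely used to mean both.) For details, refer to SSL Setup.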
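An expired or invalid certificate breaks HTTPS for every visitor, so certificate expiry is worth checking during an audit. The following is a minimal sketch using Python's standard ssl and socket modules; the helper names are hypothetical. Because ssl.create_default_context() verifies the certificate chain and hostname, an invalid certificate raises ssl.SSLCertVerificationError, which is itself an audit finding.

```python
import socket
import ssl
import time
from typing import Optional


def fetch_cert_not_after(hostname: str, port: int = 443,
                         timeout: float = 10.0) -> str:
    """Return the certificate's notAfter field (requires network access)."""
    context = ssl.create_default_context()  # verifies chain and hostname
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            cert = tls.getpeercert()
    return cert["notAfter"]


def days_until_expiry(not_after: str, now: Optional[float] = None) -> int:
    """Whole days from now until the notAfter timestamp (negative if expired)."""
    expires = ssl.cert_time_to_seconds(not_after)
    current = time.time() if now is None else now
    return int((expires - current) // 86400)
```

A typical call is days_until_expiry(fetch_cert_not_after("example.com")); a small result means the certificate should be renewed soon.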

Schema markup (structured data, rich results)

Schema markup is code in a standardized format (structured data), typically using the Schema.org vocabulary, that helps search engines understand the meaning of page content and lets them show more informative results to users (rich results).

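Implementing schema markup should not be your first priority if you haven't completed basic SEO. However, it is a powerful tool for attracting users on search engine results pages (SERPs) and improving click-through rate (CTR), although valid markup does not guarantee that Google will show a rich result.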

From a technical SEO assessment point of view, check how many pages have schema markup and whether it is implemented correctly. Google provides the Rich Results Test to verify whether a web page is eligible for rich results in search, and Search Console's rich result reports show structured data errors across your site.
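To count which pages have schema markup, you can extract the JSON-LD blocks (the format Google recommends) from each page and list their schema types. The following is a minimal sketch using Python's standard html.parser and json modules; the function names are hypothetical. It collects only top-level @type values (including items inside @graph), reports blocks that are not valid JSON, and does not cover Microdata or RDFa. It does not check Google's rich result requirements; use the Rich Results Test for that.

```python
import json
from html.parser import HTMLParser
from typing import Any, List, Tuple


class JsonLdParser(HTMLParser):
    """Collect the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self) -> None:
        super().__init__()
        self.blocks: List[str] = []
        self._buffer: List[str] = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        script_type = (dict(attrs).get("type") or "").strip().lower()
        if tag == "script" and script_type == "application/ld+json":
            self._in_jsonld = True
            self._buffer = []

    def handle_data(self, data):
        if self._in_jsonld:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "script" and self._in_jsonld:
            self.blocks.append("".join(self._buffer))
            self._in_jsonld = False


def _collect_types(node: Any, types: List[str]) -> None:
    """Gather top-level @type values, including items inside @graph."""
    if isinstance(node, list):
        for item in node:
            _collect_types(item, types)
    elif isinstance(node, dict):
        node_type = node.get("@type")
        if isinstance(node_type, str):
            types.append(node_type)
        elif isinstance(node_type, list):
            types.extend(t for t in node_type if isinstance(t, str))
        if "@graph" in node:
            _collect_types(node["@graph"], types)


def schema_types(html: str) -> Tuple[List[str], List[str]]:
    """Return (schema types found, JSON parse errors) for one page."""
    parser = JsonLdParser()
    parser.feed(html)
    parser.close()
    types: List[str] = []
    errors: List[str] = []
    for raw in parser.blocks:
        try:
            _collect_types(json.loads(raw), types)
        except json.JSONDecodeError as exc:
            errors.append(str(exc))
    return types, errors
```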

To learn more about schema markup, go to Chapter 12. Implementing Schema Markup.