The Five Essential Tips for Web Page Indexing

Search engines follow specific rules when they index websites and pages, so it helps to know those rules. Here, we'll explain five key points that can help your website get indexed.

Google Search Central provides a comprehensive guide about indexing pages. Please refer to Overview of crawling and indexing issues.

Implement sitemap.xml

A sitemap.xml file lists the important pages of your site so that Google can discover and crawl them. Submitting your sitemap through Google Search Console helps your content get indexed efficiently. How you create sitemap.xml depends on your website's technology platform. If you use WordPress, the easiest way is to use a plugin such as Yoast SEO.

You can also create sitemap.xml manually using a text editor. When you make the sitemap file yourself, place it in the website's root directory so that it can list URLs from anywhere on the site.

This is an example of a sitemap.xml file.

Sitemap.xml Example

    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <url>
        <loc>http://www.example.com/</loc>
        <lastmod>2024-02-20</lastmod>
        <changefreq>daily</changefreq>
        <priority>1.0</priority>
      </url>
      <url>
        <loc>http://www.example.com/about</loc>
        <lastmod>2024-01-15</lastmod>
        <changefreq>monthly</changefreq>
        <priority>0.8</priority>
      </url>
      :
    </urlset>
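If you maintain the page list yourself, a small script can produce the same structure instead of hand-editing XML. The following is a minimal sketch in Python using only the standard library; the `build_sitemap` function name and the page list are assumptions made for illustration, and it writes only the `loc` and `lastmod` elements.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages):
    """Return sitemap XML (as a string) for a list of (loc, lastmod) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc          # special characters are escaped for us
        ET.SubElement(url, "lastmod").text = lastmod  # date in YYYY-MM-DD form
    ET.indent(urlset)  # pretty-print; requires Python 3.9+
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body + "\n"

# Hypothetical page list, matching the example above.
pages = [
    ("http://www.example.com/", "2024-02-20"),
    ("http://www.example.com/about", "2024-01-15"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Saving the returned string as sitemap.xml in the website's root directory gives you a file you can then submit through the Search Console.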

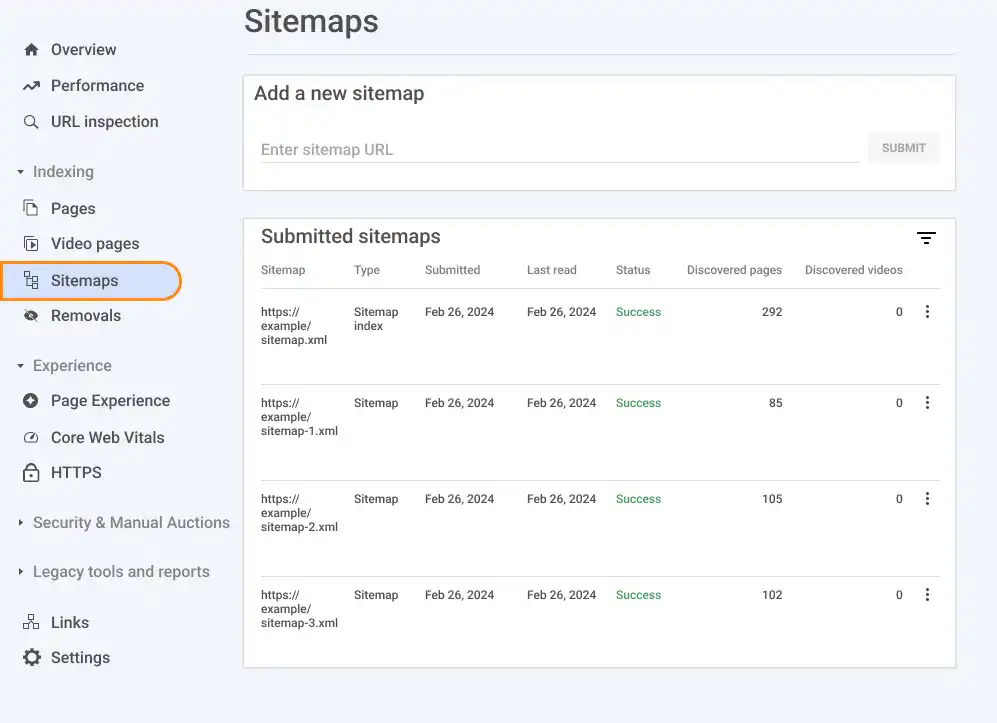

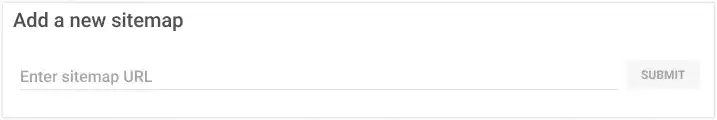

Once the sitemap file is in place, Google needs to recognize it. You can check the sitemap status in Google Search Console by selecting Sitemaps in the left sidebar.

If Google has not yet recognized your sitemap, submit its URL through the Search Console.

The sitemap.xml file is publicly accessible, so you can confirm that yours is online by opening its URL in a browser. For example, this is Apple's sitemap.xml.

Apple's sitemap ( https://www.apple.com/sitemap.xml )

    <urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
      <url>
        <loc>https://www.apple.com/</loc>
      </url>
      <url>
        <loc>https://www.apple.com/accessibility/</loc>
      </url>
      <url>
        <loc>https://www.apple.com/accessibility/assistive-technologies/</loc>
      </url>
      :
    </urlset>

A sitemap file has size limits: a single file may be at most 50MB (uncompressed) and contain no more than 50,000 URLs. If your site exceeds either limit, split the URLs across several child sitemaps and create a parent sitemap index file that references them.
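To illustrate the parent and child structure, here is a rough Python sketch that splits a URL list into chunks of at most 50,000 and builds a sitemap index pointing at one child file per chunk. The function names, the `sitemap-N.xml` naming scheme, and the 120,000-page site are all assumptions for the example; it also checks only the URL count, not the 50MB size limit.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000

def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]

def build_sitemap_index(child_sitemap_urls):
    """Return a sitemap index document that references each child sitemap."""
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for child_url in child_sitemap_urls:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = child_url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body

# Hypothetical site with 120,000 pages.
urls = [f"https://www.example.com/page-{n}" for n in range(120_000)]
chunks = chunk_urls(urls)

# One child sitemap per chunk; the file naming is an assumption.
child_names = [
    f"https://www.example.com/sitemap-{i + 1}.xml" for i in range(len(chunks))
]
index_xml = build_sitemap_index(child_names)
print(len(chunks), "child sitemaps")
```

Each chunk would then be written out as its own child sitemap file, and only the index file needs to be submitted.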

Avoid misuse of the noindex tag (robots directive in the meta tag)

To prevent search engines from indexing a webpage, you can use the noindex meta tag. This tag tells search engine crawlers that the page should not be added to their index, so it won't appear in search results. Below is an example of the noindex tag. It is often combined with the nofollow directive, which instructs search engines not to follow the links on the page or pass authority to the pages they point to.

Noindex and Nofollow Example

<meta name="robots" content="noindex, nofollow">

You should use the noindex tag only on pages you don't want to show in search results, such as duplicate pages, private pages, or temporary content.

If you mistakenly add the noindex tag to a page you want indexed, search engines will leave that page out of their index, so verify that the tag appears only where you intend it.
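One way to catch a stray noindex tag is to scan a page's HTML for the robots meta tag. The sketch below is a minimal Python example using the standard library's `html.parser`; the class and function names are made up for illustration. It looks only at `<meta name="robots">` and does not cover crawler-specific meta tags or HTTP response headers.

```python
from html.parser import HTMLParser

class RobotsMetaParser(HTMLParser):
    """Collect directives from <meta name="robots" content="..."> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )

def has_noindex(html):
    """Return True if the page's robots meta tag contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives

page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(has_noindex(page))  # True
```

Running a check like this over the pages listed in your sitemap can reveal pages that you are asking Google to crawl while also telling it not to index them.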

Also, the noindex tag is not a security measure. It only keeps a page out of search results; crawlers and visitors can still reach the page through hyperlinks or by entering its URL. If you want to prevent pages from being publicly accessible, you need to block access another way, such as requiring user login.

Removal from Index

If a page was previously indexed and you've added a noindex tag, it may take some time for search engines to revisit the page, see the noindex directive, and remove it from their index.

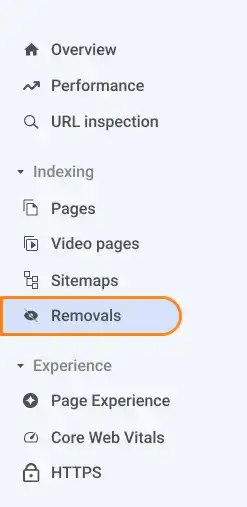

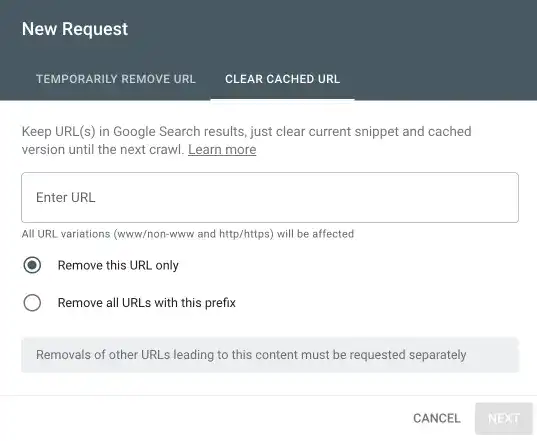

If you want to remove pages from the index quickly, you can request removal through the Search Console. Select Removals in the left sidebar.

Press the NEW REQUEST button and submit a URL for removal.

Use robots.txt accurately

The robots.txt file provides instructions to search engine bots. With it, you can prevent crawlers from crawling particular pages. You can also use it to tell crawlers where your sitemap.xml files are located.

You can also check the robots.txt file online using a URL like example.com/robots.txt.

This is an example of a robots.txt file.

Robots.txt Example ( https://www.apple.com/robots.txt )

    # robots.txt for http://www.apple.com/
    User-agent: *
    Disallow: /*/includes/*
    Disallow: /*retail/availability*
    Disallow: /*retail/availabilitySearch*
    :
    Sitemap: https://www.apple.com/shop/sitemap.xml
    Sitemap: https://www.apple.com/autopush/sitemap/sitemap-index.xml
    Sitemap: https://www.apple.com/newsroom/sitemap.xml
    Sitemap: https://www.apple.com/retail/sitemap/sitemap.xml
    Sitemap: https://www.apple.com/today/sitemap.xml

The robots.txt file must be placed in the website's root directory, because that is the only location where crawlers look for it.

To promote better indexing, ensure your robots.txt file doesn't inadvertently block access to important pages you want indexed.
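A quick way to test for accidental blocking is to run your important URLs against the rules. The following Python sketch uses the standard library's `urllib.robotparser`; the robots.txt content and the URL list are hypothetical. Note that this parser matches simple path prefixes and does not interpret wildcard patterns such as the `*` rules in Apple's file, so treat its result as a rough check rather than a statement of how Googlebot will behave.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, using simple prefix rules only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /tmp/

Sitemap: https://www.example.com/sitemap.xml
"""

# Hypothetical pages you want indexed.
IMPORTANT_URLS = [
    "https://www.example.com/",
    "https://www.example.com/about",
    "https://www.example.com/private/report",
]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Collect every important URL that the rules would stop a crawler from fetching.
blocked = [url for url in IMPORTANT_URLS if not parser.can_fetch("Googlebot", url)]
print("Blocked:", blocked)

# site_maps() (Python 3.8+) returns the Sitemap lines found in the file.
print("Sitemaps:", parser.site_maps())
```

To check a live site instead of a string, `RobotFileParser` also offers `set_url()` and `read()`, which download the file before you call `can_fetch()`.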

Implement canonical tags (link tag)

Duplicate content can hurt your search performance, because search engines have to choose one version to show and ranking signals may be split across several URLs. When you have similar or duplicate content across multiple URLs, use the canonical tag to specify which version of the page should be treated as the authoritative (canonical) one. This helps prevent duplicate content issues.

This is an example of the canonical tag.

Canonical tag example

<link rel="canonical" href="https://example.com/example-page">

There are several checkpoints when you implement the canonical tag.

- Use canonical URLs consistently: Ensure you use the same protocol (http vs. https) and subdomain (www vs. non-www) everywhere, because search engines treat different protocols and subdomains as different URLs.

- Use absolute URLs: Always use the absolute URL (full path) in the href attribute of the canonical link, not a relative URL. This avoids confusing search engines.

- Use canonical URLs in sitemap.xml: Include only the canonical URLs of your content in your XML sitemap.
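
The first two checkpoints above can be verified automatically. Below is a minimal Python sketch that extracts the canonical link from a page and reports basic problems; the function name, the problem messages, and the expected protocol and host are assumptions chosen for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse

class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])

def check_canonical(html, expected_scheme="https", expected_host="example.com"):
    """Return a list of problems found with the page's canonical tag."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return ["no canonical tag found"]
    problems = []
    if len(parser.canonicals) > 1:
        problems.append("more than one canonical tag")
    parts = urlparse(parser.canonicals[0])
    if not parts.scheme or not parts.netloc:
        # A relative href such as "/example-page" has no scheme or host.
        problems.append("canonical URL is not absolute")
    else:
        if parts.scheme != expected_scheme:
            problems.append(f"unexpected protocol: {parts.scheme}")
        if parts.netloc != expected_host:
            problems.append(f"unexpected host: {parts.netloc}")
    return problems

page = '<head><link rel="canonical" href="https://example.com/example-page"></head>'
print(check_canonical(page))  # []
```

An empty list means the tag passed these basic checks; it does not confirm that the canonical URL is the right choice for the page.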

Setting the canonical tag for all indexed pages is not mandatory, but it is recommended because it helps to prevent potential issues related to duplicate content and consolidate link signals for similar or identical content.

The canonical tag is also related to redirection settings and hreflang tags. For redirection settings, check the material below. For the hreflang tag, check International SEO Basics.

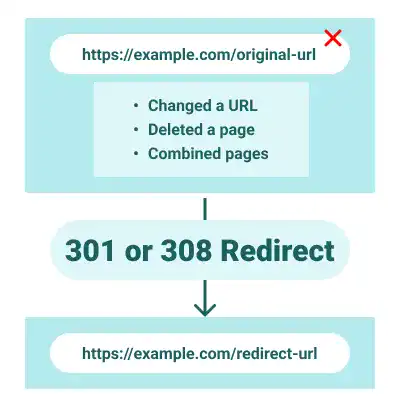

Manage redirections correctly

As the website evolves, some pages may need to change their URLs. If web pages have been indexed for a while, they may already have good backlinks, adding value (link juice) to the pages. If the page has been permanently moved, set 301 or 308 redirections to avoid losing this accumulated value.

The method of setting redirections varies depending on the technology platform. Most platforms use 301 redirection, but some may offer 308 redirection settings.
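
If your platform lets you handle requests in code, a permanent redirect comes down to answering with a 301 or 308 status and a Location header. The following Python sketch shows the idea with the standard library's `http.server`; the redirect map, the `resolve` helper, and the handler are hypothetical, and a real site would normally configure this in its web server or CMS instead. The practical difference between the two codes is that 308 requires the client to repeat the request with the same method, while 301 allows a POST to be changed to a GET.

```python
from http.server import BaseHTTPRequestHandler

# Hypothetical mapping from old paths to their new permanent locations.
REDIRECTS = {
    "/old-page": "/new-page",
    "/blog/2023/launch": "/news/launch",
}

def resolve(path, permanent_status=301):
    """Return (status, location); location is None when no redirect applies."""
    target = REDIRECTS.get(path)
    if target is None:
        return 404, None
    return permanent_status, target

class RedirectHandler(BaseHTTPRequestHandler):
    """Answer GET requests for moved pages with a permanent redirect."""

    def do_GET(self):
        status, location = resolve(self.path)
        self.send_response(status)
        if location is not None:
            self.send_header("Location", location)
        self.end_headers()

print(resolve("/old-page"))       # (301, '/new-page')
print(resolve("/old-page", 308))  # (308, '/new-page')
```

To try it locally, you could pass `RedirectHandler` to `http.server.HTTPServer` bound to a local address and port, then request one of the old paths and inspect the response headers.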